Requirements

- Basic Knowledge of Python (functions, packages, data handling)

- Understanding of Machine Learning fundamentals (training, testing, evaluation)

- Familiarity with Git & Version Control (optional but recommended)

- Basic Linux/Command Line knowledge (navigating, running scripts)

- Cloud Basics (AWS, Azure, or GCP – helpful but not mandatory)

- Interest in Automation & DevOps Concepts (CI/CD, Docker, Kubernetes)

Features

- Comprehensive Curriculum – Covers MLflow, Docker, Kubernetes, Airflow, CI/CD, and Cloud Deployment.

- Hands-On Learning – Work on real-world projects and build end-to-end ML pipelines.

- Industry Expert Trainers – Learn from professionals with years of experience in AI, ML, and DevOps.

- Experiment Tracking & Model Monitoring – Learn best practices for production ML.

- Cloud Integration – Deployment on AWS, Azure, and GCP platforms.

- Flexible Learning Options – Online & Offline batches with recorded sessions.

- Placement Assistance – Resume building, mock interviews, and job referrals.

- Certification – Industry-recognized course completion certificate.

- Community & Support – Access to learning materials, Q&A forums, and mentorship.

Target Audience

- Aspiring Data Scientists & AI Enthusiasts who want to learn how to take ML models into production.

- Machine Learning & Deep Learning Practitioners aiming to automate workflows with CI/CD and pipelines.

- Software Developers & DevOps Engineers looking to expand into MLOps and model deployment.

- Cloud Engineers & System Administrators who want to manage ML workloads using Docker, Kubernetes, and cloud platforms.

- Students & Fresh Graduates (CS, IT, AI/ML, Data Engineering) preparing for careers in Data Science and MLOps.

- Working Professionals & Career Switchers seeking to upskill in one of the fastest-growing fields in AI engineering.

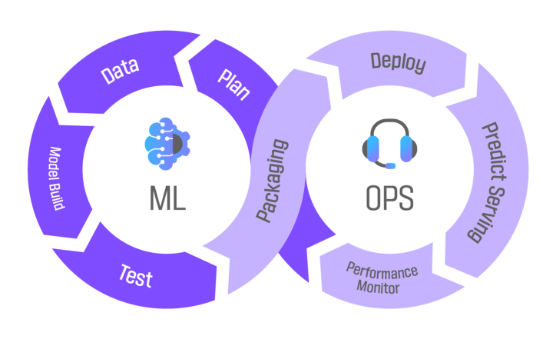

Course Overview

Course Content

- Deployment options: batch, real-time, streaming

- Serving ML models with Flask / FastAPI

- Model serving with TensorFlow Serving / TorchServe

- REST API & gRPC endpoints for ML models

- Canary and blue-green deployment strategies

- Hands-on: Deploy an ML model as a REST API on Azure Web App / AWS SageMaker / GCP AI Platform

- Versioning & Tracking: Git, DVC, MLflow, Weights & Biases

- CI/CD: Jenkins, GitHub Actions, GitLab CI

- Containers & Orchestration: Docker, Kubernetes, Helm

- Model Serving: Flask, FastAPI, TensorFlow Serving, TorchServe

- Pipelines: Airflow, Kubeflow, TFX, Prefect

- Cloud Platforms: AWS SageMaker, Azure ML, GCP Vertex AI

- Monitoring: Prometheus, Grafana, ELK Stack